Bayesian Model Selection: Using Bayes Factors to Compare the Evidence for Competing Models

Introduction: The Art of Choosing the Right Lens

Imagine standing in front of a mountain range with multiple camera lenses. Each lens shows a different view—one captures the whole horizon, another zooms into the details of a single peak, and yet another balances both. Choosing the right lens is not about which is “best” universally, but which best captures this particular view.

In data analysis, selecting a model is no different. Bayesian model selection is the art of choosing the right lens to interpret reality, balancing complexity and evidence. Rather than relying on intuition or arbitrary fit scores, it uses Bayes Factors—a numerical compass that guides us through competing explanations of the same data.

The Bayesian Perspective: A Game of Beliefs and Evidence

Bayesian thinking is less about cold equations and more about belief updates. You start with a prior belief—a rough sense of what might be true—and update it using data, forming a posterior belief. In this framework, model selection becomes a contest between hypotheses, each vying for credibility based on the strength of evidence.

Think of it like a courtroom. Each model is a lawyer presenting its case to the same jury: the data. The Bayes Factor serves as the judge’s evaluation of whose case is more convincing. A high Bayes Factor for one model means the data give more support to that hypothesis than to its rival.
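The courtroom picture has a precise form: the posterior odds of two models equal the Bayes Factor multiplied by the prior odds. Below is a minimal Python sketch. It assumes exactly two candidate models whose probabilities sum to one, and the function name `posterior_model_probs` is illustrative only:

```python
def posterior_model_probs(bf12: float, prior1: float = 0.5) -> tuple[float, float]:
    """Combine a Bayes factor BF12 with a prior probability for Model 1.

    Assumes exactly two competing models, so P(Model 2) = 1 - P(Model 1).
    """
    if bf12 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if not 0 < prior1 < 1:
        raise ValueError("prior1 must lie strictly between 0 and 1")
    prior_odds = prior1 / (1 - prior1)   # P(M1) / P(M2) before seeing the data
    posterior_odds = bf12 * prior_odds   # the data rescale the odds by BF12
    p1 = posterior_odds / (1 + posterior_odds)
    return p1, 1 - p1


# Evenly split prior belief, data three times likelier under Model 1:
print(posterior_model_probs(3.0))  # (0.75, 0.25)
```

With a sceptical prior of `prior1=0.2`, the same Bayes Factor lifts Model 1 only to about 0.43. The Bayes Factor measures the evidence, while the prior still shapes the final belief.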

This probabilistic mindset is central to modern analytics and decision-making—a perspective cultivated in advanced programmes such as the Data Scientist course in Mumbai, where professionals learn not only the mathematics behind Bayesian inference but also its real-world implications in business forecasting and machine learning.

Unpacking the Bayes Factor: The Odds of Belief

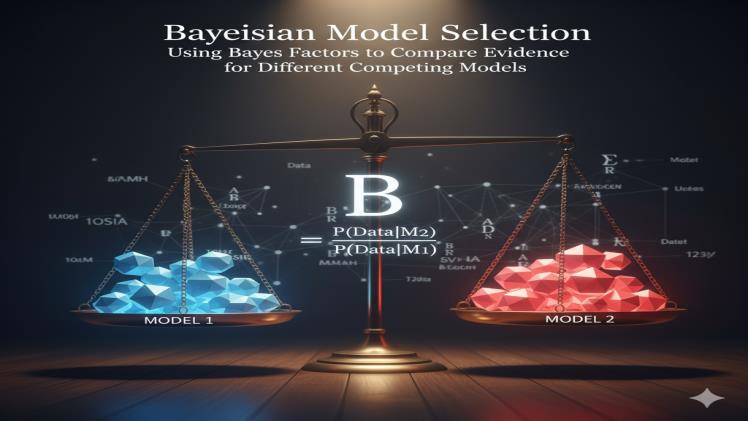

At its core, the Bayes Factor is deceptively simple: it is the ratio of how likely the observed data is under one model compared to another. Mathematically, it measures

$$BF_{12} = \frac{P(\text{Data} \mid \text{Model}_1)}{P(\text{Data} \mid \text{Model}_2)}$$

If this value is greater than one, Model 1 is favoured; if it is less than one, Model 2 gains the edge.

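But beyond numbers, it’s a tale of balance. Overly simple models often fail to capture real structure, while excessively complex ones risk seeing patterns in noise. Bayes Factors penalise unnecessary complexity automatically through the marginal likelihood, which averages a model’s fit over every parameter value its prior allows. A flexible model spreads its prior across many configurations, most of which fit the data poorly, so its average fit drops. This keeps a model’s flexibility from falsely inflating its apparent accuracy.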

Imagine two detectives solving a mystery. One keeps theories lean, relying on direct clues. The other builds elaborate conspiracies. The Bayes Factor quietly rewards the first detective whenever the simpler explanation accounts for the evidence about as well as the elaborate one, embodying Occam’s razor in statistical form.
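A toy example makes this penalty concrete. Suppose Model 1 says a coin is fair (p = 0.5 exactly), and Model 2 says p could be anything, with a uniform prior on [0, 1]. Both marginal likelihoods have closed forms here, so the Bayes Factor can be computed exactly. The coin set-up and the function names are illustrative assumptions, not taken from any particular library:

```python
from math import comb


def marginal_fair(n: int, k: int) -> float:
    """P(data | Model 1): p is fixed at 0.5, so there is nothing to integrate."""
    return 0.5 ** n


def marginal_uniform(n: int, k: int) -> float:
    """P(data | Model 2): p ~ Uniform(0, 1), integrated out analytically.

    The integral of p^k (1 - p)^(n - k) over [0, 1] is the Beta function
    B(k + 1, n - k + 1), which equals 1 / ((n + 1) * C(n, k)).
    """
    return 1.0 / ((n + 1) * comb(n, k))


def bayes_factor_12(n: int, k: int) -> float:
    """BF12 for one specific sequence of n flips containing k heads."""
    return marginal_fair(n, k) / marginal_uniform(n, k)


for heads in (5, 9):
    print(f"{heads} heads out of 10: BF12 = {bayes_factor_12(10, heads):.3f}")
# 5 heads out of 10: BF12 = 2.707
# 9 heads out of 10: BF12 = 0.107
```

With 5 heads, Model 2’s best-fitting value (p = 0.5) matches the fair coin exactly. The Bayes Factor still favours Model 1 by about 2.7 because Model 2 spent prior mass on values that fit poorly. With 9 heads, the data genuinely call for flexibility, and the Bayes Factor swings to roughly 9 to 1 in favour of Model 2. Using the probability of the specific sequence rather than the binomial count does not matter here, since the binomial coefficient would cancel in the ratio.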

From Likelihood to Evidence: The Hidden Power of Integration

While frequentist methods typically judge models through point estimates and hypothesis tests, Bayesian model selection integrates over all possible parameter values within a model, weighting each by its prior probability. This holistic perspective considers every plausible configuration, giving a richer sense of how well the model, as a whole, explains the data.
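In symbols, write θ_i for the parameters of Model i and P(θ_i | Model_i) for their prior. The evidence that enters the Bayes Factor is then the likelihood averaged over that prior:

$$P(\text{Data} \mid \text{Model}_i) = \int P(\text{Data} \mid \theta_i, \text{Model}_i)\, P(\theta_i \mid \text{Model}_i)\, d\theta_i$$

The Bayes Factor BF12 is the ratio of this integral for i = 1 and i = 2.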

To visualise this, think of two sculptors. One chisels away at a single block to perfection; the other evaluates dozens of rough forms before deciding which captures the intended shape. Bayesian analysis works like the second sculptor—it doesn’t commit to a single “best” set of parameters but evaluates the total evidence a model can muster across its parameter space.

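This elegance, however, comes at a computational cost. The marginal likelihood is an integral over the whole parameter space that rarely has a closed form, a hurdle that once confined Bayesian model comparison largely to theorists. Modern computation has made these ideas practical for everyday data work. MCMC (Markov Chain Monte Carlo) on its own only draws samples from the posterior, though. Turning those samples into a marginal likelihood takes dedicated estimators such as bridge sampling, while nested sampling and the Laplace approximation offer alternative routes.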
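The simplest way to see the integration problem is to estimate a marginal likelihood by brute force: draw parameter values from the prior and average the likelihood. The Python sketch below does this for a hypothetical coin model with a uniform prior on the heads probability p, where the exact answer is known and can be checked. This is plain Monte Carlo from the prior, a deliberately simple stand-in rather than MCMC. It becomes very inefficient when the prior is diffuse or the parameter space has many dimensions, which is why practical work relies on more refined estimators such as bridge sampling or nested sampling.

```python
import random
from math import comb


def likelihood(p: float, n: int, k: int) -> float:
    """Probability of one specific sequence with k heads in n flips, given p."""
    return p ** k * (1 - p) ** (n - k)


def mc_marginal_likelihood(n: int, k: int, draws: int = 200_000, seed: int = 0) -> float:
    """Estimate P(data | model) by averaging the likelihood over prior draws.

    Prior assumed here: p ~ Uniform(0, 1).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        p = rng.random()              # one draw from the Uniform(0, 1) prior
        total += likelihood(p, n, k)
    return total / draws


n, k = 10, 9
exact = 1.0 / ((n + 1) * comb(n, k))  # closed form: Beta(k + 1, n - k + 1)
estimate = mc_marginal_likelihood(n, k)
print(f"exact = {exact:.6f}, Monte Carlo = {estimate:.6f}")
```

The estimate should land within a fraction of a percent of the exact value here. With many parameters, though, almost all prior draws fall where the likelihood is negligible, and the variance of this estimator explodes.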

Model Selection in Practice: Beyond Numbers to Insight

In the real world, the Bayes Factor’s interpretive power shines in fields like genomics, finance, and AI. For instance, when comparing two predictive models for credit risk, one linear and the other non-linear, the Bayes Factor quantifies how strongly the data support one over the other once each model’s complexity is accounted for, not just which one fits historical numbers more closely.

Interpretation often follows a rough guide adapted from Harold Jeffreys’ scale of evidence:

- BF < 1: Model 2 is favoured (take 1/BF and read it on the same scale)

- 1–3: Weak evidence for Model 1

- 3–10: Substantial evidence for Model 1

- >10: Strong evidence for Model 1
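The guide is easy to encode as a helper. Below is a minimal Python sketch using the thresholds from the list. Two details are assumptions, since the guide leaves them open: values of exactly 3 or 10 count as "substantial", and values below 1 are inverted to BF21 = 1/BF12 and read on the same scale. The function name is illustrative only:

```python
def interpret_bayes_factor(bf12: float) -> tuple[str, str]:
    """Map BF12 onto the rough guide: (favoured model, strength of evidence).

    Assumed boundary handling: [1, 3) is 'weak', [3, 10] is 'substantial',
    and anything above 10 is 'strong'. Values below 1 are inverted
    (BF21 = 1 / BF12) and read on the same scale in favour of Model 2.
    """
    if bf12 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf12 == 1:
        return "neither", "no evidence either way"
    favoured, strength = ("Model 1", bf12) if bf12 > 1 else ("Model 2", 1 / bf12)
    if strength < 3:
        label = "weak"
    elif strength <= 10:
        label = "substantial"
    else:
        label = "strong"
    return favoured, label


for bf in (2.7, 0.107, 25.0):
    print(bf, interpret_bayes_factor(bf))
# 2.7 ('Model 1', 'weak')
# 0.107 ('Model 2', 'substantial')
# 25.0 ('Model 1', 'strong')
```

Inverting values below 1 keeps the scale symmetric. A BF12 of 0.107 is the same strength of evidence for Model 2 (about 9.3) as a BF12 of 9.3 would be for Model 1.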

Yet these boundaries are conventions rather than rigid laws: they help calibrate confidence without dictating interpretation. Data scientists trained in advanced methods, such as those enrolled in the Data Scientist course in Mumbai, learn how to interpret these nuances and apply Bayesian reasoning to complex decision problems where uncertainty is the rule, not the exception.

The Balance Between Fit and Parsimony

One of the most significant challenges in model building is overfitting—a model that performs brilliantly on training data but fails in the real world. Bayes Factors provide a safeguard against this by naturally favouring models that explain the data without overcomplicating it.

Picture two musicians improvising. One adds flourish after flourish until the melody gets lost; the other keeps it evocative yet straightforward. Bayesian selection applauds the latter—it rewards harmony between complexity and clarity. It’s not about finding the most detailed model, but the one that best balances fidelity and generalisation.

Conclusion: The Wisdom of Evidence

Bayesian model selection, anchored by Bayes Factors, teaches a profound truth: science and analysis are not about certainty but about degrees of belief, supported by evidence. In a world where data floods in from every corner—social networks, sensors, markets—this perspective helps us weigh options with humility and precision.

Just as a photographer learns to choose the right lens for each scene, the data scientist learns to select the right model for each problem. The Bayes Factor does more than pick winners: it reminds us that every model is a story, and the best one is the story the data itself tells most convincingly.